Web UI

The Web UI is an interactive interface for getting an overview of staged scan data, managing scans, and controlling the reconstruction process and the mesh annotation pipeline. An indexing server indexes all uploaded scans into a single CSV file that contains each scan's ID and metadata. The indexed scans are displayed in the web UI, which also lets users manually reprocess the collected scans if needed. The following sections explain how to set up the web UI and how to interact with it.
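To show what a consumer of the index sees, here is a minimal sketch that turns the index CSV into one object per scan. The column names (`id`, `sceneType`, `group`, `tags`) are hypothetical. The parser also assumes that no field contains a comma, quote or newline. A real reader should use a full CSV parser.

```javascript
// Minimal sketch: parse the scan index CSV into one object per scan.
// Assumptions (hypothetical, not taken from the indexing server):
//   - the first line is a header row
//   - no field contains commas, quotes, or newlines (no CSV quoting)
function parseScanIndex(csvText) {
  const lines = csvText
    .split(/\r?\n/)
    .filter((line) => line.trim() !== ""); // skip blank lines
  if (lines.length === 0) return [];

  const header = lines[0].split(",").map((h) => h.trim());
  return lines.slice(1).map((line) => {
    const values = line.split(",");
    const row = {};
    header.forEach((name, i) => {
      row[name] = (values[i] ?? "").trim(); // missing trailing fields become ""
    });
    return row;
  });
}

// Usage with a made-up two-scan index; the column names are illustrative only.
const index = parseScanIndex(
  "id,sceneType,group,tags\n" +
    "scan_001,kitchen,staging,room_a\n" +
    "scan_002,kitchen,multiscan,room_a\n"
);
console.log(index.length); // 2
console.log(index[1].group); // multiscan
```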

UI Overview

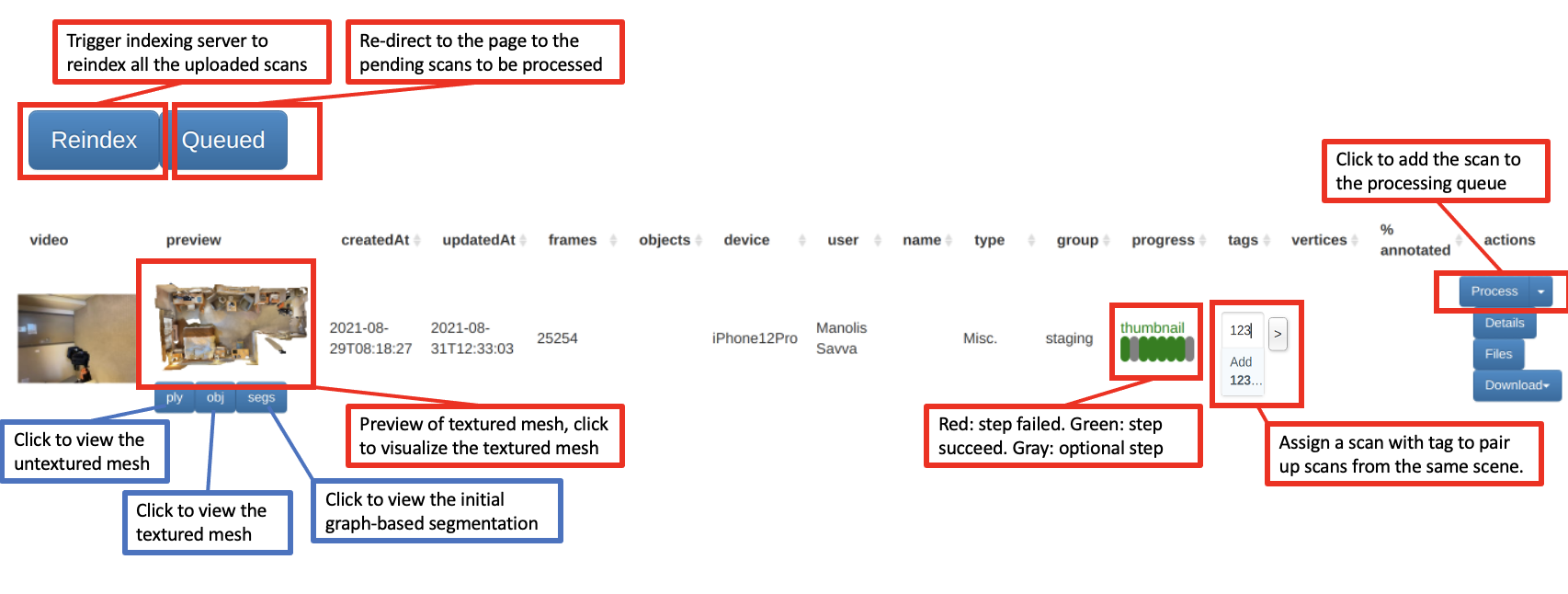

The web UI displays the indexed scans in a table, one row per scan. Users can interact with the web UI as follows:

The progress bar shows whether each processing step completed without error. If a step fails, its oval button turns red.

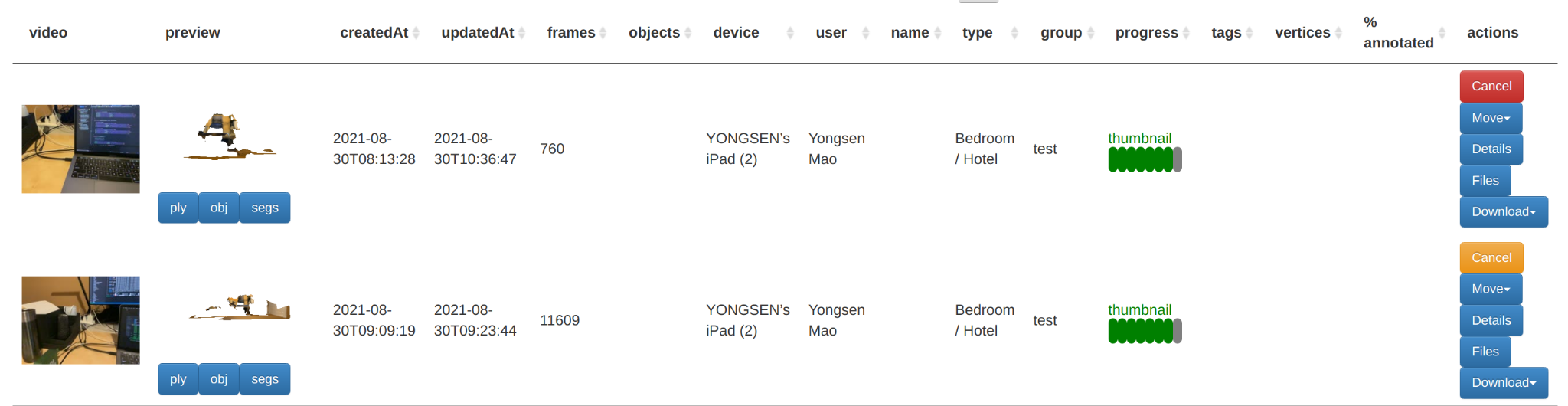

The Process button reprocesses a scan. If several processing requests are triggered from the web UI, they are kept in a queue and processed one at a time. Click the Queued button to view all scans in the queue:

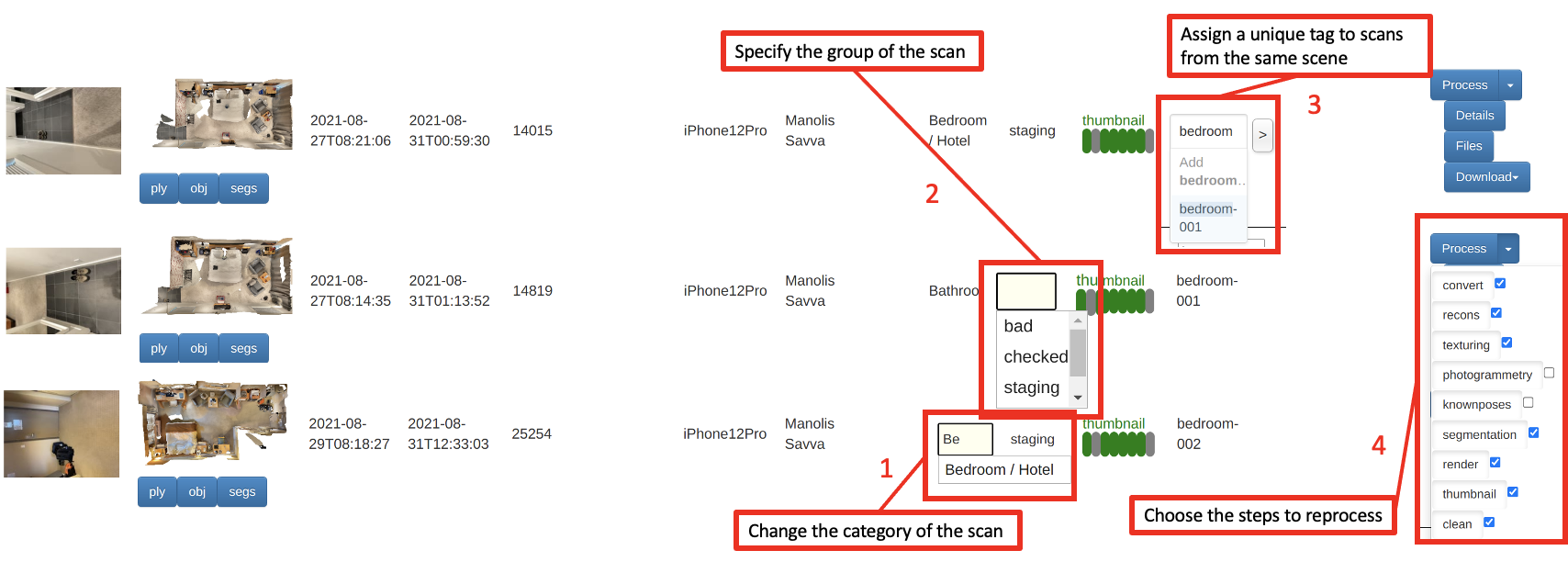
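The one-at-a-time behavior of the queue is a plain FIFO queue. The sketch below shows the idea with promises. It is not the processing server's code, and `runJob` is a hypothetical stand-in for whatever actually processes a scan.

```javascript
// Minimal sketch of a FIFO processing queue: requests can arrive at any time,
// but jobs run strictly one after another, in arrival order.
// `runJob` is a hypothetical async function that processes a single scan.
class ProcessQueue {
  constructor(runJob) {
    this.runJob = runJob;
    this.pending = []; // waiting jobs, i.e. what a "Queued" view would list
    this.running = null; // ID of the scan currently being processed, if any
  }

  // Queue a scan; the returned promise settles when that scan's job finishes.
  enqueue(scanId) {
    return new Promise((resolve, reject) => {
      this.pending.push({ scanId, resolve, reject });
      this._drain();
    });
  }

  // Queued scan IDs, in the order they will run.
  queuedIds() {
    return this.pending.map((job) => job.scanId);
  }

  async _drain() {
    if (this.running !== null) return; // the active loop will pick new jobs up
    while (this.pending.length > 0) {
      const { scanId, resolve, reject } = this.pending.shift();
      this.running = scanId;
      try {
        resolve(await this.runJob(scanId));
      } catch (err) {
        reject(err); // a failed job does not block the jobs behind it
      }
    }
    this.running = null;
  }
}
```

Because only one `_drain` loop is active at a time, a request that arrives while a job is running simply waits in `pending` until the loop reaches it.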

Rooms can be hard to categorize correctly, so the user should check the scene type of each scan and change it to the correct category if needed (see highlighted box 1).

Checking reconstruction quality

Reconstruction and texture quality depend on the quality of the scan acquired with the scanner app. The group field distinguishes between bad, good, and test scans (see highlighted box 2). A bad label indicates one of three things: the scan has invalid uploaded files, its reconstruction results are poor, or it should be discarded. By default, the group field is set to staging, which means the scan is staged and its quality still needs to be checked. The user clicks the preview image and inspects the textured mesh in the 3D model viewer for cleanness, completeness, and texturing quality. The user then labels the result in the group field. The user marks the scan as bad if the textured mesh has any of these problems: a large portion of floating noise points, large or numerous holes, or badly misaligned textures. If the quality is good, the user marks the scan as checked; scans labeled checked are annotated as described in the following sections.

Summary of group tags to use:

- staging: everything starts in this group
- test: “throw away” scan
- multiscan: good scan, no significant artifacts
- bad: failed reconstruction or otherwise garbage
- bad-geometry: big holes, significant floating geometry, misalignments
- bad-texture: significant texturing issues
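The group tags form a small, fixed vocabulary. The sketch below classifies a tag and builds a request body that changes a scan's group through the `/scans/edit` service. The Web services table says that service follows the DataTables Editor client-server protocol. This sketch makes two assumptions about this server, neither confirmed: the scan ID is used as the row ID, and the field is named `group`. The form encoding shown is the bracketed `data[rowId][field]` shape that DataTables Editor uses by default.

```javascript
// Group tags from the summary list above.
const GROUP_TAGS = ["staging", "test", "multiscan", "bad", "bad-geometry", "bad-texture"];
// Tags that mark a scan as failed or unusable.
const BAD_TAGS = new Set(["bad", "bad-geometry", "bad-texture"]);

const isKnownGroupTag = (tag) => GROUP_TAGS.includes(tag);
const isBadGroup = (tag) => BAD_TAGS.has(tag);
const needsReview = (tag) => tag === "staging"; // not yet quality-checked

// DataTables Editor style "edit" request that sets one scan's group.
// Assumptions: the row ID is the scan ID and the field is named "group".
function buildGroupEdit(scanId, tag) {
  return { action: "edit", data: { [scanId]: { group: tag } } };
}

// Encode the request as form parameters of the form data[<rowId>][<field>]=<value>.
function toFormBody(request) {
  const params = new URLSearchParams();
  params.append("action", request.action);
  for (const [rowId, fields] of Object.entries(request.data)) {
    for (const [field, value] of Object.entries(fields)) {
      params.append(`data[${rowId}][${field}]`, String(value));
    }
  }
  return params.toString();
}

console.log(toFormBody(buildGroupEdit("scan_001", "bad-texture")));
// action=edit&data%5Bscan_001%5D%5Bgroup%5D=bad-texture
```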

Manual Reprocessing

Pressing the Process button is usually unnecessary, because each scan is processed automatically after upload. The reconstruction and texturing methods are largely deterministic, so reprocessing should give similar or identical results. To rerun only specific steps, click the drop-down arrow next to the Process button. Check the steps to rerun in the list of checkboxes, then reprocess them (see highlighted box 4).

Rescans

In MultiScan, each scene is scanned multiple times with objects in different articulation states and poses. Tags correlate multiple scans of the same room: a unique tag pairs the scans of one scene and separates them from scans of other scenes (see highlighted box 3). The user adds a unique label to the tag field of one scan and assigns the same label to the other scans of that scene. Scans that share a tag serve two purposes. They are used to compute the relative transformation matrices that align them to a common pose, and to correlate the same objects across the scans of the scene.
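Pairing scans by tag amounts to grouping on the tag field. The sketch below produces the per-tag summary that the Tags page displays: tag name, scan count, and member scans, sorted by tag name. The `{ id, tag }` scan shape, with one tag per scan, is a hypothetical simplification.

```javascript
// Minimal sketch: group scans that share a tag, sorted by tag name with a
// per-tag count.
// Assumption: each scan is a hypothetical { id, tag } object with one tag.
function groupByTag(scans) {
  const groups = new Map();
  for (const { id, tag } of scans) {
    if (!tag) continue; // untagged scans are not paired with any other scan
    if (!groups.has(tag)) groups.set(tag, []);
    groups.get(tag).push(id);
  }
  return [...groups.entries()]
    .sort(([a], [b]) => (a < b ? -1 : a > b ? 1 : 0))
    .map(([tag, scanIds]) => ({ tag, count: scanIds.length, scanIds }));
}
```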

Align Grouped Scans

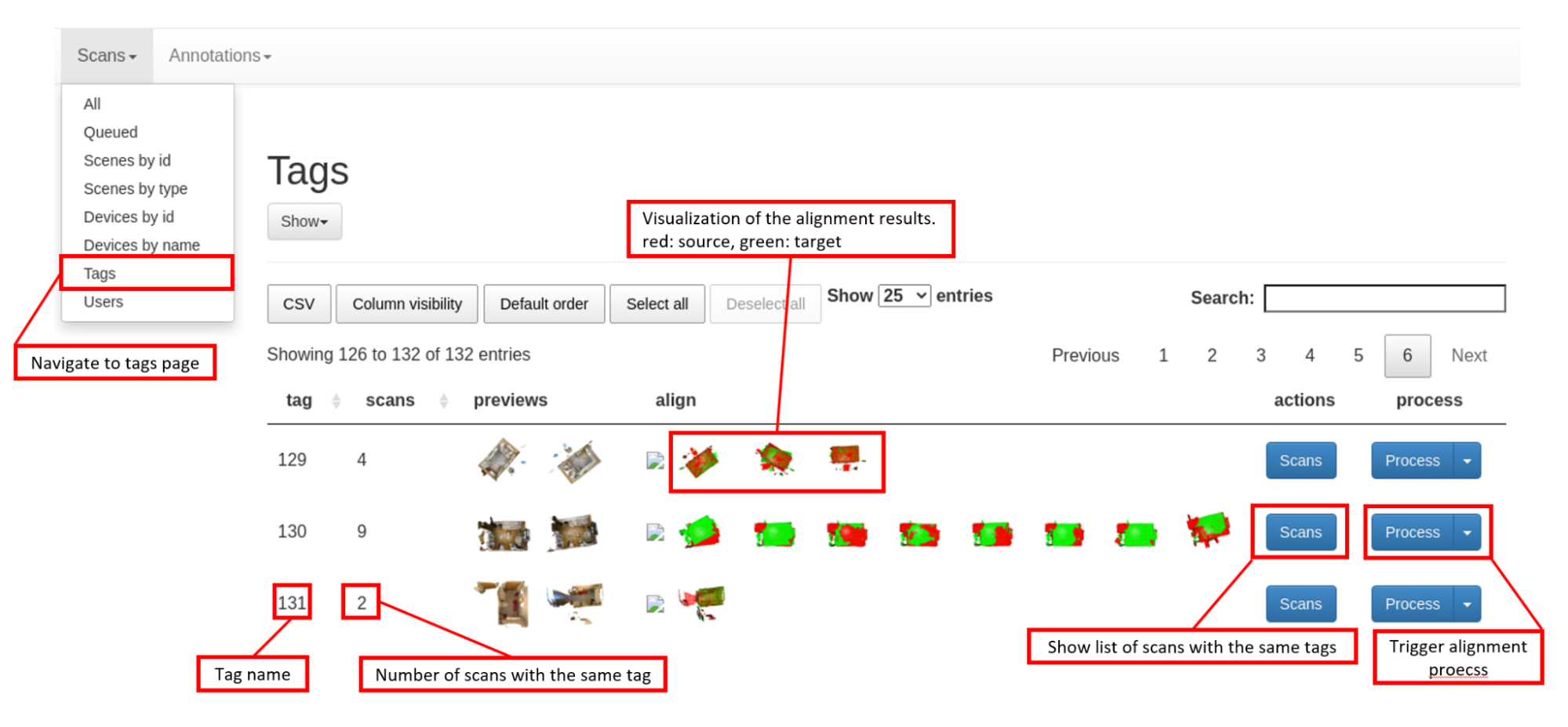

MultiScan contains multiple scans of the same room, capturing objects in different articulation states and poses within the same environment.

After scans are grouped by tag, the reconstructed meshes in a group are still in different coordinate frames. To align them, we use a multiscale, ICP-based pairwise alignment method that registers each scan to a target scan: the earliest scan collected in the scanner app.

From the Scans tab, navigate to the Tags page. It lists the grouped scans sorted by tag name, together with the number of scans under each tag. In the actions column, the Scans button opens a table of the scans that share that tag. To have the processing server align the grouped scans, press the Process button; all source scans are then aligned to the target scan. A visualization of each alignment result is shown in the align column.
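The choice of target and sources within a tag group can be sketched as follows. The earliest-collected scan becomes the target, and each remaining scan yields one source-to-target registration. The `capturedAt` timestamp field is a hypothetical assumption. The multiscale ICP registration itself is not shown.

```javascript
// Minimal sketch: plan the pairwise alignments for one tag group.
// The earliest-collected scan becomes the target; every other scan is a
// source to be registered to it (the ICP registration itself is not shown).
// Assumption: `capturedAt` is a hypothetical ISO-8601 capture timestamp.
function planAlignment(scans) {
  if (scans.length === 0) throw new Error("empty scan group");
  const ordered = [...scans].sort(
    (a, b) => Date.parse(a.capturedAt) - Date.parse(b.capturedAt)
  );
  const [target, ...sources] = ordered;
  return {
    target: target.id,
    pairs: sources.map((s) => ({ source: s.id, target: target.id })),
  };
}
```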

Web Client

This is the Web front-end for MultiScan.

Introduction

This project uses the Vue.js 2 framework with Axios as the HTTP client. The UI follows Material Design, using the Vuetify framework.

Configuration

Vue.js settings are in vue.config.json; for further custom options, see the Vue.js config reference.

Axios settings are in src/axios/index.js.

Setup & Run

Make sure you have Node.js and npm installed first.

To install all dependencies, run:

```shell
npm install
```

To compile and run with hot reload for development, run:

```shell
npm run serve
```

The app then runs at localhost:8080 by default.

To compile and minify for production, run:

```shell
npm run build
```

The compiled static pages are written to dist/.

To lint and fix files, run:

```shell
npm run lint
```

Web Client Endpoints

| URL | Description |
|---|---|
| `/scans` | Main management view |
| `/group_view?type=sceneNames` | Group by scene names |
| `/group_view?type=sceneTypes` | Group by scene types |
| `/group_view?type=devices` | Group by scanning devices |
| `/group_view?type=tags` | Group by tags |
| `/group_view?type=users` | Group by users |
| `/annotations` | Annotations view (embedded in an iframe) |

(Note: endpoints are defined in src/router/index.js.)

Web Server

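Setting up and running the web UI server takes three steps:

1. Make sure you have Node.js and npm installed.
2. Install the dependencies:

   ```shell
   cd web-ui/web-server
   npm install
   ```

3. Start the app:

   ```shell
   npm start
   ```

By default, the web UI starts at http://localhost:3030/.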

Link Staged Scans

To give the web UI access to staged scan files, such as preview videos and thumbnail images, create symbolic links to the staging directory with the following commands:

```shell
mkdir data
mkdir data/multiscan
mkdir data/multiscan/scans
ln -s "$(realpath /path/to/folder/staging)" data/multiscan/scans
```

Then create symbolic links to the new data directory in the src and public folders:

```shell
ln -s "$(realpath data)" src/data
ln -s "$(realpath data)" public/data
```

Web services

| URL | Description |
|---|---|
| `/scans/list` | Returns JSON of all scans (supports Feathers-style querying) |
| `/scans/index` | Reindexes all scans |
| `/scans/index/<scanId>` | Reindexes the specified scan |
| `/scans/monitor/convert_video/<scanId>` | Converts the h264 video to mp4 and generates thumbnails for the specified scan |
| `/scans/process/<scanId>` | Adds the scan to the processing queue |
| `/scans/edit` | Edits metadata associated with a scan (follows the DataTables Editor client-server protocol) |
| `/scans/populate` | Updates scans |
| `/api/stats/users` | Returns JSON of scans grouped by user |
| `/api/stats/scenes_types` | Returns JSON of scans grouped by scene type |
| `/api/stats/device_ids` | Returns JSON of scans grouped by device ID |
| `/api/stats/tags` | Returns JSON of scans grouped by tag |
| `/api/scans` | Returns JSON of all scans |
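We read "Feathers-style querying" for `/scans/list` as the FeathersJS query syntax: equality filters plus `$sort`, `$limit` and `$skip`. The sketch below builds such a query string. The field names `group` and `id` are hypothetical. The set of operators the server actually accepts is an assumption. Keys such as `$sort[id]` are percent-encoded by `URLSearchParams`, and the server is assumed to decode them.

```javascript
// Build a FeathersJS-style query string for /scans/list.
// Supports equality filters, $sort (1 = ascending, -1 = descending), $limit, $skip.
function buildListQuery({ filters = {}, sort = {}, limit, skip } = {}) {
  const params = new URLSearchParams();
  for (const [field, value] of Object.entries(filters)) {
    params.append(field, String(value));
  }
  for (const [field, dir] of Object.entries(sort)) {
    params.append(`$sort[${field}]`, String(dir));
  }
  if (limit !== undefined) params.append("$limit", String(limit));
  if (skip !== undefined) params.append("$skip", String(skip));
  return params.toString();
}

// Hypothetical example: the 20 most recent staged scans, newest ID first.
const query = buildListQuery({ filters: { group: "staging" }, sort: { id: -1 }, limit: 20 });
console.log(`/scans/list?${query}`);
// /scans/list?group=staging&%24sort%5Bid%5D=-1&%24limit=20
```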